Patience sort and the Longest increasing subsequence

Play cards and watch TV

In 1999 The Bulletin of the American Mathematical Society published a paper by David Aldous and Persi Diaconis entitled: “Longest Increasing Subsequences: From Patience Sorting to the Baik-Deift-Johansson Theorem”.

In case that sounds heavy going, the authors kick off with a card game.

Take a deck of cards labeled 1, 2, 3, … , n. The deck is shuffled, cards are turned up one at a time and dealt into piles on the table, according to the rule

- A low card may be placed on a higher card (e.g. 2 may be placed on 7), or may be put into a new pile to the right of the existing piles.

At each stage we see the top card on each pile. If the turned up card is higher than the cards showing, then it must be put into a new pile to the right of the others. The object of the game is to finish with as few piles as possible.

And if this still sounds too mathematical (when did you last find a deck of cards labelled 1 through n?) Aldous and Diaconis suggest.

To play with real cards one needs to linearly order the 52 cards, e.g. by putting suits in the bridge-bidding order ♣ ♦ ♥ ♠. This mindless form of solitaire is then quite playable, perhaps while watching television.

As a target they recommend aiming for 9 piles, which, combined with an optimal strategy, gives you roughly a 5% chance of winning. In what follows I’ll also be adopting the bridge convention that Aces are high.

A greedy strategy turns out to be optimal — otherwise you’d probably need to switch off the TV in order to concentrate. What’s more, this same strategy discovers the longest increasing subsequence of cards contained within the shuffled deck.

Greedy Algorithms

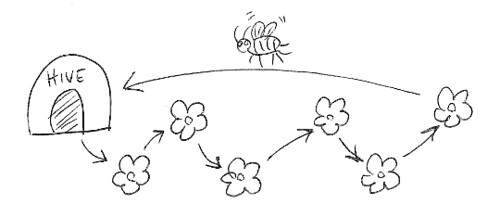

In computer science a greedy algorithm always makes the choice that looks best at that particular moment. Typically greedy algorithms are easy to understand and swift to operate — but that doesn’t make them optimal. A traveling salesman might greedily pick the closest unvisited city at every stage of his journey. The graphic shows a busy bee zig-zagging from flower to flower, always choosing the closest, when clearly a looping route would be better.

For the patience sort card game a greedy strategy would be to place each card on the leftmost pile on which it can go, according to the rules of the game. A clever argument proves this strategy leads to an optimal solution.

Greedy patience sorting

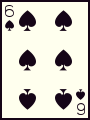

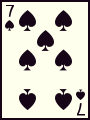

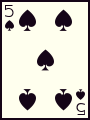

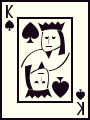

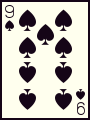

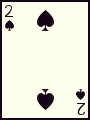

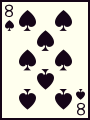

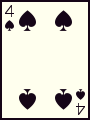

The argument hinges on increasing subsequences of cards within the shuffled deck. For example, if we restrict our attention to the spades suit, then given the sequence:

an increasing subsequence would be:

and a longest increasing subsequence is:

(Note in passing that there may be more than one longest increasing subsequence — we could replace the 5 for the 6 in the example above. This is why I’ll try to say a longest increasing subsequence instead of the longest increasing subsequence.)

If we define L(π) to be the length of the longest increasing subsequence of a permutation of our card deck, π, then Aldous and Diaconis show the following holds.

Lemma 1. With deck π, patience sorting played with the greedy strategy ends with exactly L(π) piles. Furthermore, the game played with any legal strategy ends with at least L(π) piles. So the greedy strategy is optimal and cannot be improved by any look-ahead strategy.

Proof. If cards a1 < a2 < … < al appear in increasing order, then under any legal strategy each ai must be placed in some pile to the right of the pile containing ai-1, because the card number on top of that pile can only decrease. Thus the final number of piles is at least l, and hence at least L(π). Conversely, using the greedy strategy, when a card c is placed in a pile other than the first pile, put a pointer from that card to the currently top card c′ < c in the pile to the left. At the end of the game, let al be the card on top of the rightmost pile l. The sequence a1 ← a2 ← … ← al-1 ← al obtained by following the pointers is an increasing subsequence whose length is the number of piles.

What a fabulous result! Despite the mathematical language, this proof actually consists of an algorithm for finding L(π), and indeed for determining an actual longest increasing subsequence of π.

Implementation

Coding this up in Python we get:

import bisect

import defaultdict

# We want a maximum function which accepts a default value

from functools import partial, reduce

maximum = partial(reduce, max)

def patience_sort(xs):

'''Patience sort an iterable, xs.

This function generates a series of pairs (x, pile), where "pile"

is the 0-based index of the pile "x" should be placed on top of.

Elements of "xs" must be less-than comparable.

'''

pile_tops = list()

for x in xs:

pile = bisect.bisect_left(pile_tops, x)

if pile == len(pile_tops):

pile_tops.append(x)

else:

pile_tops[pile] = x

yield x, pile

def longest_increasing_subseq_length(xs):

'''Return the length of the longest increasing subsequence of xs.

>>> longest_increasing_subseq_length(range(3))

3

>>> longest_increasing_subseq_length([3, 1, 2, 0])

2

'''

return 1 + maximum((pile for x, pile in patience_sort(xs)), -1)

def longest_increasing_subsequence(xs):

'''Return a longest increasing subsequence of xs.

(Note that there may be more than one such subsequence.)

>>> longest_increasing_subsequence(range(3))

[0, 1, 2]

>>> longest_increasing_subsequence([3, 1, 2, 0])

[1, 2]

'''

# Patience sort xs, stacking (x, prev_ix) pairs on the piles.

# Prev_ix indexes the element at the top of the previous pile,

# which has a lower x value than the current x value.

piles = [[]] # Create a dummy pile 0

for x, p in patience_sort(xs):

if p + 1 == len(piles):

piles.append([])

# backlink to the top of the previous pile

piles[p + 1].append((x, len(piles[p]) - 1))

# Backtrack to find a longest increasing subsequence

npiles = len(piles) - 1

prev = 0

lis = list()

for pile in range(npiles, 0, -1):

x, prev = piles[pile][prev]

lis.append(x)

lis.reverse()

return lis

Implementation notes

- The patience sort algorithm has been separated from from the subsequent processing of the moves it generates.

- I’ve shown a couple of clients of

patience_sort(). The first simply counts the number of piles used. The second maintains the back-link infrastructure required to recover a longest increasing subsequence, and returns such a subsequence. I also wrote a third client which generates some of the graphics on this page. - The

bisectmodule provides Pythonic support for binary searching sorted sequences. In this case, the cards on top of the piles remain in sorted order. - You may be wondering why

longest_increasing_subseq_length()doesn’t just check the length ofxsto handle the empty input case. That’s because patience sort is a greedy algorithm, which is happy to process any iterable, not just a sequence of known length. Patience_sort()relies on the elements of the input sequencexsbeing less than comparable. Well, obviously we need to be able to compare things to sort them! Usually, though, it’s more flexible to allow clients to pass in a comparison function, as modelled by standard Python functions likesort()andmax(). I’ve not done this here because thebisectmodule doesn’t (yet) allow clients to pass in custom less-than functions.

Although the cards which end up on top of the piles at the end of the sorting phase do form an increasing sequence, this sequence may not be a subsequence of the original shuffled deck. We need to maintain back-pointers, implemented here as list indices, to recover an actual longest increasing subsequence.

At the end of the patience sort phase our deck of cards is not fully sorted: typically, we’d expect to end up with a dozen or so sorted piles, which we’d need to merge to complete the sorting. The card with the lowest value (the 2 of clubs for a full deck) should always ends up on top of the left-most pile — a simple sanity-check on any patience sorting routine.

A deck of cards contains no duplicated values, but patience sorting works perfectly well without this restriction. Use bisect_left() in patience_sort() (as shown) to find a strictly increasing subsequence; bisect_right() would find a sequence which never decreases.

Streaming results

The patience_sort() function yields results as soon as it finds them. This stream-based approach works nicely with a greedy algorithm. For example, an impatient player hoping to complete the game using just 9 piles would give up as soon as a 10th pile gets created.

def win(deck):

'''Return True if the input deck wins at patience sort, False otherwise.'''

# (Note: piles are zero based)

return all(pile < 9 for card, pile in patience_sort(deck))

We can combine this function with random shuffling to experimentally investigate the claim that aiming for 9 piles gives roughly a 5% chance of winning.

def psort_win_ratio(deck):

'''Patience sorts a deck of cards, yielding (wins, tries) pairs.'''

wins = 0

for tries in itertools.count(1):

random.shuffle(deck)

if win(deck):

wins += 1

yield wins, tries

How many tries before we win 1000 times? On this occasion, 18539, suggesting a slightly better than 5% chance of winning.

>>> results = psort_win_ratio(list(range(52))) >>> next((w, t) for w, t in results if w > 999) (1000, 18539)

Animation

The animation at the top of this article combines jQuery with CSS positioning. If it doesn’t work in your browser, my apologies. It demonstrates patience sorting using the optimal greedy strategy, then back-tracks to find the longest increasing subsequence. I haven’t shown the back pointers which need to be placed on the piles alongside the cards, and are then used during the back-tracking. You’ll have to imagine that, as each card is placed on a pile, a back-arrow connects it to the card on top of the pile to its left — a card which it succeeds in the sequence and exceeds in value.

Click on the graphic above to shuffle and patience sort a full deck of cards.

Complexity

This patience sort algorithm processes each card immediately. That doesn’t make it a linear algorithm — but it’s not that much worse. Selecting the pile on which to place each card involves a binary search through the P piles, giving an O(N log P) sorting phase. The final track back through the piles to determine the longest increasing subsequence is O(P). In the worst case the deck would already be in order, leading to N piles and O(N log N) performance.

Wikipedia points to some improvements to this if we know more about the distribution of input values.

Space requirements are also O(N).

Marathon results

You might be wondering: why bother with a binary search to locate the pile for a particular card? Surely it would be just as easy to scan the piles from left to right, stopping as soon as we reach one whose top card is higher than the one currently in play? Well, yes, and in fact I’ve taken this approach in my Javascript implementation — as far as I know, Javascript has no built-in binary search function, and when you’ve got an input size fixed at 52 items efficiency is not a major issue.

For larger inputs though, scanning through the piles from left to right would be O(P), and we’d end up with a quadratic longest subsequence algorithm.

Let’s now consider a larger input sequence: the 38096 runners who completed the 2008 New York City marathon. If we have a list of these runners, ordered by finishing time, what would be the longest sublist comprising runners who are getting younger? Below I’ve highlighted just such a sublist of the 8 athletes who clocked under two and a quarter hours.

- MARILSON GOMES DOS SANTOS, 31, M, 2:08:43

- ABDERRAHIM GOUMRI, 32, M, 2:09:07

- DANIEL RONO, 30, M, 2:11:22

- PAUL TERGAT, 39, M, 2:13:10

- ABDERRAHIME BOURAMDANE, 30, M, 2:13:33

- ABDI ABDIRAHMAN, 31, M, 2:14:17

- JOSH ROHATINSKY, 26, M, 2:14:23

- JASON LEHMKUHLE, 31, M, 2:14:30

I’ve been considering this very problem over the course of a mini-series of four articles. In the most recent episode I showed how a solution could be found as a special case of the more general longest common subsequence problem. That generality came at a price: a rough Python implementation took over an hour to run and even a C++ implementation took over 18 seconds.

How does our new longest_increasing_subsequence() function shape up? To try it out, all we need is a suitable less-than function.

class AgingRunner:

'''Class to represent runners keyed by age.'''

....

def __lt__(self, other):

'''Return True if this runner is *older* than the other.'''

return self.age > other.age

Finding the longest age-decreasing sublist took 430 milliseconds on a laptop computer with a 2GHz processor. The resulting sublist had 60 entries.

How about the longest sublist of athletes who aren’t getting any older? We could change patience_sort() to use bisect_right() instead of bisect_left(), or, as shown below, we can tweak our less than function.

class AgingRunner:

...

def __lt__(self, other):

"Return True if this runner *isn't younger* than the other."

return self.age >= other.age

This second sublist has length 1724, and took 630 milliseconds to find.

Applications

The longest increasing subsequence provides a measure of how close a sequence is to being sorted. In the general case, computing this length using the algorithms discussed in this article will be more efficient than finding the edit distance between the sequence and a sorted version of itself.

Wikipedia’s entry on patience sorting pointed me at the Bazaar version control system, which uses patience sorting in its differencing routines rather than the more commonly used edit distance algorithms. I’m not entirely sure what the code is up to but there’s certainly some patience sorting in there.

Fonts and Unicode

I generated the playing card graphics from Ryan Neaveill’s shareware playing card font. The Unicode standard defines 8 playing card symbols (PDF).

Michael Everson has formally submitted a paper to the Unicode Technical Committee entitled “Proposal to encode dominoes and other game symbols in the UCS” (PDF) — a paper which also makes use of Ryan Neaveill’s playing cards font for illustrations.

Related

This article is the fourth in a mini-series. Its predecessors are:

- Race within a race, which introduced the problem

- Ordered sublists, which showed a brute force approach

- Longest common subsequence, which discussed an important family of dynamic programming algorithms

I’ve also written a number of articles about streams, or lazily evaluated lists.