Elegance and Efficiency

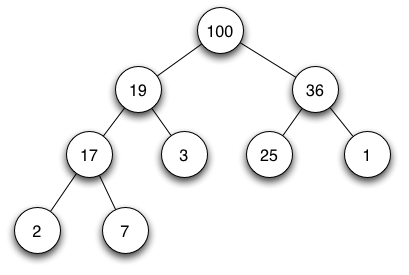

Elegant code is often efficient. Think of the heap data structure, for example, which always remains exactly as sorted as it needs to be, making it perfect for modelling priority queues. It’s both elegant and efficient — and dazzlingly so.

This article discusses the relationship between elegance and efficiency in more depth, and asks the question: Can inefficient code ever be elegant?

What is Elegant Code?

First, we should consider what’s meant by “elegant code”.

Anthony Williams discusses this very subject in a recent blog post (which is what got me thinking about it in the first place). Up front he admits the search for elegance is subjective and that the factors he lists are all “my opinion”. He also points out his list is not exhaustive. Nonetheless, it’s a good starting point, and I’d like to build on it. Let’s start by summarising his list here.

Factors affecting the elegance of software

- Does it work?

- Is it easy to understand?

- Is it efficient?

- Short functions

- Good naming

- Clear division of responsibility

- High cohesion

- Low coupling

- Appropriate use of OO and other techniques

- Minimal code

Appearance

I’m not sure this list completely nails elegance. For a start, there’s no mention of appearance — the way the code actually looks, on screen, or in print — which in my opinion is fundamental. Elegant code looks clean, balanced, self-consistent.

That’s one of the reasons I like Python: it’s hard to get away with poorly laid out code. Scheme, with its minimal syntax, also wins here. Java stands a good chance of doing well on this front too, thanks to a clearly stated set of coding conventions and excellent IDE support for applying these conventions.

Use of standard libraries

I’d also say that appropriate and even cunning use of the language’s standard libraries can add to code’s elegance. Williams hints at this with his mention of Minimal Code, though minimalism covers many other things.

As an example, if you’re using C++, you should take the time to become familiar with the standard library, and use it whenever possible. It works. It’s efficient. In fact it embodies pretty much everything Williams lists, with a few notable exceptions (no one could describe std::string as minimal, and std::auto_ptr is notoriously slippery). Use the standard library and you’ll save yourself code and time, and your own code will be the more elegant for it.

Planar vectors in Scheme

Let’s return to Scheme to illustrate my point about cunning use of standard libraries and consider exercise 2.46 from the Wizard Book.

Exercise 2.46. A two-dimensional vector v running from the origin to a point can be represented as a pair consisting of an x-coordinate and a y-coordinate. Implement a data abstraction for vectors by giving a constructor make-vect and corresponding selectors xcor-vect and ycor-vect. In terms of your selectors and constructor, implement procedures add-vect, sub-vect, and scale-vect that perform the operations vector addition, vector subtraction, and multiplying a vector by a scalar.

An obvious solution would be to model the 2-D vector as a pair.

(define make-vect cons)

(define xcor-vect car)

(define ycor-vect cdr)

(define (add-vect v w)

(make-vect (+ (xcor-vect v) (xcor-vect w))

(+ (ycor-vect v) (ycor-vect w))))

(define (sub-vect v w)

(make-vect (- (xcor-vect v) (xcor-vect w))

(- (ycor-vect v) (ycor-vect w))))

(define (scale-vect s v)

(make-vect (* s (xcor-vect v))

(* s (ycor-vect v))))

An elegant alternative builds on Scheme’s support for complex numbers.

;; represent 2-D vect using a complex number (define make-vect make-rectangular) (define xcor-vect real-part) (define ycor-vect imag-part) (define add-vect +) (define sub-vect -) (define scale-vect *) ;; some other vector operations come for free (define magnitude-vect magnitude) (define make-vect-from-polar-coords make-polar) (define angle-vect angle)

Minimalism and Simplicity

Elegance and beauty are not the same, though perhaps elegant forms a subset of beautiful. Elegance carries the additional connotation of simplicity, which itself correlates with minimalism. If I were forced to select the single item from Williams’ list most closely aligned to elegance, I’d go for minimalism: allowed my own choice, it would be simplicity.

Williams notes a couple of ways you can remove to improve:

- avoid unnecessary layering

- eliminate duplication

We’ve already added:

- use standard libraries

Kevlin Henney gives minimalism more careful attention in a series of articles. Omit Needless Code promotes:

Code that is simple and clear, brief and direct.

Henney illustrates his points with some elegant examples which reinforce my own claims about the C++ standard library

As an example, the common task of counting words in a text file or stream can be reduced to a single statement of executable C++ code [Henney2001c] when built on the appropriate abstractions:

typedef std::istream_iterator<std::string> in; std::cout << std::distance(in(std::cin), in());Want to count characters instead?

typedef std::istreambuf_iterator<char> in; std::cout << std::distance(in(std::cin), in());Or lines?

typedef std::istreambuf_iterator<char> in; std::cout << std::count(in(std::cin), in(), '\n');These fragments are all compact and fluffless, crisp and essential.

Efficiency and Elegance?

Efficiency comes high on Williams’ list, right after correctness, which shouldn’t be a surprise to anyone who writes code for a living. Surely code which doesn’t run fast enough is about as useful as code which doesn’t work? You could even note that efficiency is yet another aspect of minimalism: in this case, it’s the machine’s resource consumption you’d like to reduce.

I’m not convinced, though. It’s true, many of the most elegant algorithms happen to be efficient too — and may even have arisen from the quest for efficiency. Thus the standard quicksort algorithm has virtually no space overhead, and as a general purpose sorting algorithm, really can’t be beaten. Similarly the heap, as already mentioned, is a lean clean implementation of a priority queue. But I don’t think elegance implies efficiency. I’d even suggest that something could be elegant but of no practical use, at least not on today’s hardware.

The downside of efficiency is that it can be at odds with simplicity and minimalism. Consider the sad fate of boost::lexical_cast, a general purpose conversion function. If I go back to early Boost releases I find code which reads like this.

template<typename Target, typename Source>

Target lexical_cast(Source arg)

{

# ifndef BOOST_NO_STRINGSTREAM

std::stringstream interpreter;

# else

std::strstream interpreter; // for out-of-the-box g++ 2.95.2

# endif

Target result;

if(!(interpreter << arg) || !(interpreter >> result) ||

!(interpreter >> std::ws).eof())

throw bad_lexical_cast();

return result;

}

For brevity I’ve omitted file headers, include guards and the unexceptional definition of boost::bad_lexical_cast. Even with these present, the file runs to just 68 lines long, and provides an elegant example of what generic C++ code can do. The body of lexical_cast itself is a readable one-liner, tainted only by a preprocessor workaround for non-compliant compilers.

Wind forwards to 2007, and this small stain has spread across the entire library, which, after tuning for correctness, portability and efficiency now weighs in at well over 1K lines of code. Here’s a flavour of the latest greatest lexical_cast, which is far too long to include in its entirety.

namespace detail // lcast_put_unsigned

{

// I'd personally put lcast_put_unsigned in .cpp file if not

// boost practice for header-only libraries (Alexander Nasonov).

template<typename T, typename CharT>

CharT* lcast_put_unsigned(T n, CharT* finish)

{

CharT thousands_sep = 0;

#ifdef BOOST_LEXICAL_CAST_ASSUME_C_LOCALE

char const* grouping = "";

std::size_t const grouping_size = 0;

#else

std::locale loc;

typedef std::numpunct<CharT> numpunct;

numpunct const& np = BOOST_USE_FACET(numpunct, loc);

std::string const& grouping = np.grouping();

std::string::size_type const grouping_size = grouping.size();

if(grouping_size)

thousands_sep = np.thousands_sep();

#endif

std::string::size_type group = 0; // current group number

char last_grp_size = grouping[0] <= 0 ? CHAR_MAX : grouping[0];

// a) Since grouping is const, grouping[grouping.size()] returns 0.

// b) It's safe to assume here and below that CHAR_MAX

// is equivalent to unlimited grouping:

#ifndef BOOST_NO_LIMITS_COMPILE_TIME_CONSTANTS

BOOST_STATIC_ASSERT(std::numeric_limits<T>::digits10 < CHAR_MAX);

#endif

char left = last_grp_size;

do

{

if(left == 0)

{

++group;

if(group < grouping_size)

{

char const grp_size = grouping[group];

last_grp_size = grp_size <= 0 ? CHAR_MAX : grp_size;

}

left = last_grp_size;

--finish;

*finish = thousands_sep;

}

--left;

--finish;

int const digit = static_cast<int>(n % 10);

int const cdigit = digit + lcast_char_constants<CharT>::zero;

*finish = static_cast<char>(cdigit);

n /= 10;

} while(n);

return finish;

}

}

I’m not saying that the changes to boost::lexical_cast are bad: after all, users of the library get software which does the right thing more often and more quickly — all without any client-side changes. That’s one of the benefits of using a layered software stack. Rather, I present this as an example of the tension between efficiency and elegance. Somewhere along the line, an elegant piece of code got buried.

It’s also interesting that, in this case, even “does-it-work” counteracts elegance. We noted that boost::lexical_cast@v1.22 became tainted in its eagerness to work with legacy compilers. The current version makes far greater concessions. It’s a reminder — as if any were needed — that we programmers have to keep our feet on the ground and aim for pragmatic solutions. Perfection is rarely possible, elegance occasional.

Elegance and Inefficiency?

We’ve demonstrated the tension between elegance and efficiency, but could blatantly inefficient code ever claim to be elegant? The original elegant implementation of lexical_cast may not have been optimally tuned for all possible inputs (it’s meant to be generic code, after all), but it could hardly be described as inefficient.

We’re going to develop some code which I’ll claim is elegant despite being inefficient. To get us started, let’s consider another problem we can skin in more than one way: how do we determine if a book forms a lipogram? (A lipogram is a piece of text written avoiding the use of a particular character, the letter E for example, and full length books really have been written — and even translated — which adhere to this constraint.)

We’ll pose the problem in C++. Here’s the function prototype.

#include <string> #include <vector> typedef std::string word; typedef std::vector<word> book; // Return true if the input text avoids using any characters // in 'avoid', false otherwise. // Example call: // bool const lipo = is_lipogram(text, "Ee"); bool is_lipogram(book const & text, word const & avoid);

What we have here might be seen as a loop within a loop within a loop: for each word in the book, for each character in that word, check against each character in the string of characters to be avoided. A match against an avoided character means we know our book isn’t a lipogram, and we can return false; but if we reach the end of our book without such a match, we can return true.

is_lipogram1

We can code this up:

typedef word::const_iterator word_iter;

typedef book::const_iterator book_iter;

bool is_lipogram(book const & text, word const & avoid)

{

// Handle edge case -- empty book

if (text.empty())

{

return true;

}

// Handle edge case -- nothing to avoid

if (avoid.empty())

{

return true;

}

for (book_iter w = text.begin(); w != text.end(); ++w)

{

for (word_iter c = w->begin(); c != w->end(); ++c)

{

for (word_iter a = avoid.begin(); a != avoid.end(); ++a)

{

if (*c == *a)

{

return false;

}

}

}

}

return true;

}

This painstaking chunk of code reads like a direct transcription of the way an unfortunate human proof-reader might approach the task, one finger tracking through the text, word by word, character by character, another finger repeatedly working through the characters to be avoided. It fails the elegance test on a number of counts:

- Not minimal. The edge cases do not merit special treatment. Normal processing of the (nested) main loop handles empty inputs just fine.

- Failure to use the standard library. The

std::stringclass is big enough to support searches for characters in a string directly, allowing us to remove a couple of layers of nesting. - Clumsy. The function has four separate exit points.

Perhaps none of these charges seem too bad in such a small function, but small functions have a tendency to grow into larger ones, and flaws, in particular, scale rapidly.

is_lipogram2 & 3

Here’s a standard-library-aware improvement.

bool is_lipogram(book const & text, word const & avoid)

{

for (book_iter w = text.begin(); w != text.end(); ++w)

{

if (w->find_first_of(avoid) != std::string::npos)

{

return false;

}

}

return true;

}

Many programmers would leave it at that, but I still prefer to re-cast this particular variant as follows:

bool is_lipogram(book const & text, word const & avoid)

{

book_iter w = text.begin();

while (w != text.end() &&

w->find_first_of(avoid) == std::string::npos)

{

++w;

}

return w == text.end();

}

Rather than exit as soon as we detect a character in the avoid string, we keep reading as long as there’s text to read and we’ve avoided such characters. There’s not much in it, especially in such a small function, but my preference is to simplify the control flow.

is_lipogram4

We can remove the explicit iteration from our code by using the std::find_if algorithm, which accepts a predicate. In this case we want to find the first word which isn’t itself a lipogram. Combining the std::not1 function adaptor with a hand-written class deriving from std::unary_function<std::string const, bool> does the job.

This code demonstrates proper use of the STL predicates and adaptors, but it also reaches the limits of my personal comfort zone for using C++ in a functional programming style. The price paid for avoiding explicit iteration is just too high; clever though this code may be, I don’t find it elegant.

When I first coded up lipogram_word_tester, it derived from std::unary_function<word const &, bool>. This turns out to be wrong, or at least, it failed to compile with a typically cryptic diagnostic, and I’m still not sure why!

// Simple functor for use in lipogram algorithms

class lipogram_word_tester:

public std::unary_function<word const, bool>

{

public:

// Constructor. Caches a copy of the 'avoid' parameter.

lipogram_word_tester(word const & avoid)

: avoid(avoid)

{

}

// Function operator returns true if the word being tested

// omits characters from the 'avoid' string, false otherwise.

bool operator()(word const & to_test) const

{

return to_test.find_first_of(avoid)

== std::string::npos;

}

private:

// Private cache of characters to avoid.

word const avoid;

};

bool is_lipogram(book const & text, word const & avoid)

{

lipogram_word_tester const word_test(avoid);

return find_if(text.begin(), text.end(),

not1(word_test)) == text.end();

}

is_lipogram5

I would expect all four functions presented so far to be similarly efficient in terms of memory, stack, CPU cycles.

A recursive solution may require more stack: it depends on the compiler. We’ve now got two functions, and although each comprises just a single expression, the expression forming the body of the recursive helper function, is_lipo(), is tricky. I wouldn’t recommend this implementation.

bool is_lipo(book_iter wb, book_iter we, word const & avoid)

{

return wb == we ||

wb->find_first_of(avoid) == std::string::npos &&

is_lipo(++wb, we, avoid);

}

bool is_lipogram(book const & text, word const & avoid)

{

return is_lipo(text.begin(), text.end(), avoid);

}

is_lipogram6

Our final alternative is a clear winner on the three fronts which led us to reject our original implementation: it’s brief, it leans heavily on the standard library, it has just a single exit point — in fact, is just a single expression.

bool is_lipogram(book const & text, word const & avoid)

{

return accumulate(text.begin(), text.end(), std::string()

).find_first_of(avoid) == std::string::npos;

}

Does it qualify as elegant? I’d say so, yes. Sadly, though, its inefficiency rules it out as a heavy-duty lipogram checker. The std::string class is not designed for repeated addition — which is what std::accumulate does.

Winding Up

Actually none of the C++ lipogram checkers are much use, except in the case when we’re certain our book is written in 7-bit ASCII. A lipogram which avoids the letter E should also avoid its various accented forms: é, è, ê, ë, É, È, Ê, Ë, …

A heavy-duty lipogram checker needs to work in Unicode and, for C++ at least, will have to establish some ground rules for input encoding schemes. The current C++ standard (C++98) has little to say about Unicode. We’d be better off using a more Unicode aware language, such as Java.

Python allows us to create a character stream which accumulates all the characters in all the words, but yields them lazily. The function below uses itertools.chain to flatten the input words (which themselves may be a stream or an in-memory collection) into a character stream. The built-in all function reads exactly as far into this stream as it needs to. In other words, we’ve got a Python counterpart to our final C++ algorithm which is both efficient (efficient for Python that is!) and equally happy with Unicode and ASCII.

import iterools

def is_lipogram(words, avoid):

return all(ch not in avoid

for ch in itertools.chain(*words))

C++ Source Code

#include <cassert>

#include <functional>

#include <iostream>

#include <numeric>

#include <set>

#include <string>

#include <vector>

namespace

{

typedef std::string word;

typedef word::const_iterator word_iter;

typedef std::vector<word> book;

typedef book::const_iterator book_iter;

typedef bool (* lipo_fn)(book const &, word const &);

// Return true if the input text avoids using any characters

// in 'avoid', false otherwise.

bool is_lipogram1(book const & text, word const & avoid)

{

// Handle edge case -- empty book

if (text.empty())

{

return true;

}

// Handle edge case -- nothing to avoid

if (avoid.empty())

{

return true;

}

for (book_iter w = text.begin(); w != text.end(); ++w)

{

for (word_iter c = w->begin(); c != w->end(); ++c)

{

for (word_iter a = avoid.begin(); a != avoid.end(); ++a)

{

if (*c == *a)

{

return false;

}

}

}

}

return true;

}

bool is_lipogram2(book const & text, word const & avoid)

{

for (book_iter w = text.begin(); w != text.end(); ++w)

{

if (w->find_first_of(avoid) != std::string::npos)

{

return false;

}

}

return true;

}

bool is_lipogram3(book const & text, word const & avoid)

{

book_iter w = text.begin();

while (w != text.end() &&

w->find_first_of(avoid) == std::string::npos)

{

++w;

}

return w == text.end();

}

// Simple functor for use in lipogram algorithms

class lipogram_word_tester:

public std::unary_function<std::string const, bool>

{

public:

// Constructor. Caches a copy of the avoid parameter.

lipogram_word_tester(word const & avoid)

: avoid(avoid)

{

}

// Function operator returns true if the word being tested

// omits characters from the 'avoid' string, false otherwise.

bool operator()(word const & to_test) const

{

return to_test.find_first_of(avoid)

== std::string::npos;

}

private:

// Private cache of characters to avoid.

word const avoid;

};

bool is_lipogram4(book const & text, word const & avoid)

{

lipogram_word_tester const word_test(avoid);

return find_if(text.begin(), text.end(),

not1(word_test)) == text.end();

}

bool is_lipo5(book_iter wb, book_iter we, word const & avoid)

{

return wb == we ||

wb->find_first_of(avoid) == std::string::npos &&

is_lipo5(++wb, we, avoid);

}

bool is_lipogram5(book const & text, word const & avoid)

{

return is_lipo5(text.begin(), text.end(), avoid);

}

bool is_lipogram6(book const & text, word const & avoid)

{

return accumulate(text.begin(), text.end(), std::string()

).find_first_of(avoid) == std::string::npos;

}

void read_book(book & text, std::istream & input)

{

typedef std::istream_iterator<word> in;

std::copy(in(input), in(), back_inserter(text));

}

// Function-like class used for lipo_fn evaluation.

class lipo_functor

{

public:

// Construct an instance of this class, caching lipo_fn parameters.

lipo_functor(book const & text, word const & avoid)

: text(text)

, avoid(avoid)

{

}

// Return the result of applying is_lipo to the cached parameters.

bool operator()(lipo_fn is_lipo)

{

return is_lipo(text, avoid);

}

private:

book const & text;

word const & avoid;

};

void check_if_lipogram(std::ostream & report,

book const & text, word const & avoid)

{

typedef std::set<bool> answers;

lipo_fn const lipo_fns[] =

{

is_lipogram1,

is_lipogram2,

is_lipogram3,

is_lipogram4,

is_lipogram5,

is_lipogram6,

};

lipo_functor lipo_func(text, avoid);

answers results;

lipo_fn const * const end = lipo_fns + sizeof lipo_fns / sizeof *lipo_fns;

transform(lipo_fns, end, inserter(results, results.end()), lipo_func);

assert(results.size() == 1);

report << "Is " << (*results.begin() ? "" : "not ")

<< "a lipogram" << '\n';

}

} // end anonymous namespace

int main()

{

book text;

word const avoid = "Ee";

read_book(text, std::cin);

check_if_lipogram(std::cout, text, avoid);

return 0;

}